A guide to generative AI: from how it works to best practices in model training

Introduction

Just 12 months ago, OpenAI led a tech revolution that pushed generative AI into the broader public consciousness by launching ChatGPT. Google closely followed by launching its own conversational AI tool, Bard, and more recently announced a new large language model (LLM) called Gemini. Consumer- and enterprise-focused businesses continue to introduce new generative AI offshoots at an ever-increasing pace.

In this guide to generative AI, we share the history of the technology, how it works, some of its practical applications, and considerations for generative AI model training.

01 The road to generative AI

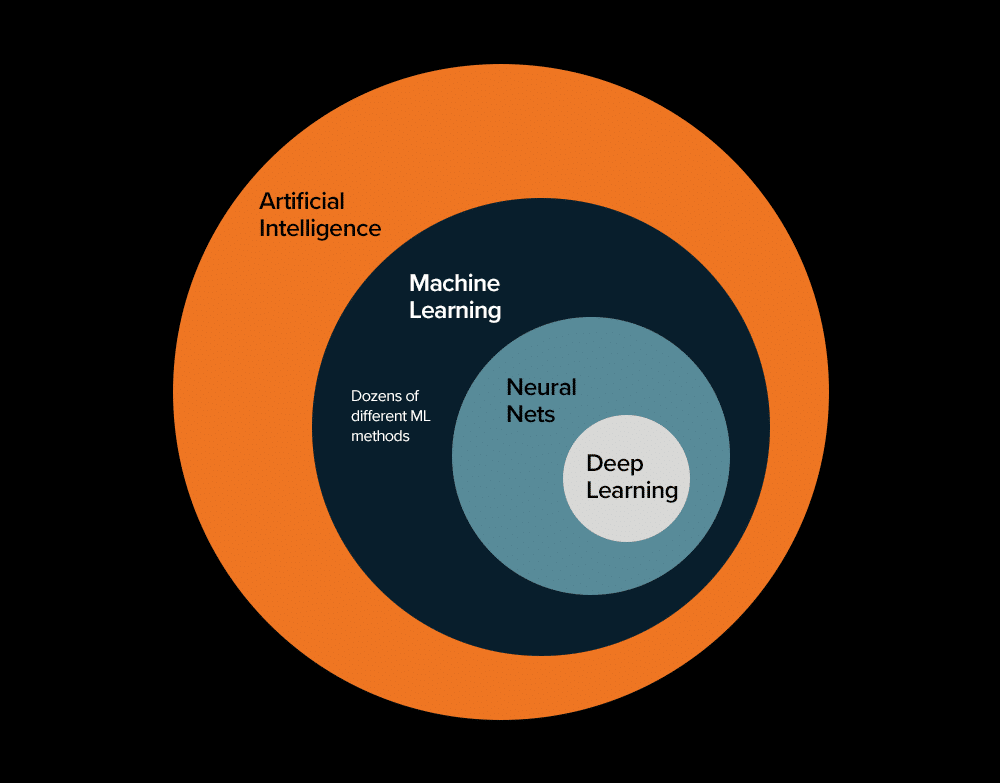

Artificial intelligence (AI) is a hot topic these days, along with concepts such as machine learning, deep learning, and, more recently, generative AI. But the concept of AI dates back to the 1950s and 1960s, when it got its start as nothing more than some fairly limited if-then statements.

Before long, two camps of computer engineers emerged that headed down divergent paths: one camp designed and built tools to help humans be more productive; the other designed and built tools that could outperform humans in certain tasks.

Throughout the years, the development and advancement of AI has been based upon a computer’s ability to learn, or to be trained. There are two approaches to this process, with the second building on the first:

- Machine learning – Technological advances paved the way for a formalized and optimized method of training computers to perform repetitive tasks, such as analyzing photos. As a subfield of AI, machine learning is the primary way that most people interact with AI. Training a machine learning algorithm to produce a model that’s capable of performing complex tasks typically requires guidance from one or more people.

- Deep learning – Automating some of the steps that people typically perform in machine learning has reduced the need for human participation in the training process. With deep learning algorithms, models can handle larger amounts of data, including social media content and other unstructured data. Neural networks, the layered algorithms behind deep learning that are loosely modeled on the human brain, can perform functions in a fraction of the time it takes a manually trained algorithm.

02 The history of generative AI

It’s only in the last year that the broader public has become aware of generative AI, but its roots go back to the 1950s. In 1950, Alan Turing proposed the Turing test, which assesses whether a machine can exhibit behavior indistinguishable from that of a human. This was followed in 1957 by the creation of the first trainable neural network.

For the next four decades, computer scientists and researchers developed increasingly advanced neural networks as well as the deep learning techniques through which such networks can be trained. However, these advances were data and power hungry, and it wasn’t until the late 2000s that there was enough training data and computational power available to (1) outperform traditional machine learning methods, and (2) enable the use of deep learning on a commercial scale.

In 2014, a group of researchers at the Université de Montréal introduced the generative adversarial network (GAN), a modeling approach in which two neural networks “compete” against each other. This enabled neural networks to create images, video and audio. Shortly before, in late 2013, variational autoencoders (VAEs) had been introduced for generative modeling, extending the autoencoder technique, in which two networks, an encoder and a decoder, are trained jointly.

Since then, advances in deep learning and neural networks, along with the development and adoption of large language and multimodal models, have transformed generative AI. Instead of just being highly specialized tools that focus on audio, imagery, or words, generative AI models have become multi-purpose technologies that excel within and across all of these media.

Guided by natural language prompts, generative AI can create photorealistic images, paintings, audio, video, computer code, and anything that involves words. Generative AI has also proven itself capable of passing many of the tests that are essential for pursuing a career in law, medicine or business. It even passed the introductory and advanced exams required to become a sommelier.

03 How generative AI works

Like any other AI, the deep learning models underpinning generative AI tools must be trained. The models behind ChatGPT, Bard and DALL-E were each trained in a controlled environment on vast amounts of data gathered from across the internet: works of art in all genres and the written word, from garden-variety social media posts to works of classical literature. And once one of these tools is released to the public, its developer may continue the training using the prompts and feedback of end users.

This training has enabled generative AI tools to deepen their understanding of the relationships between different types of data, such as the written word and visual concepts. They use that understanding to problem solve, improve, and create different, better pieces of content.
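To make the idea of learning relationships from training data more concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT or DALL-E are built: it assumes a one-sentence toy corpus and simple word-pair counts in place of a deep learning model, and the function names `train_bigram_model` and `generate` are made up for this illustration. The principle it shows is the same, though: the program first learns which words tend to follow which, then uses what it learned to extend a prompt with new text.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Record, for every word, the words that follow it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word].append(next_word)
    return dict(followers)


def generate(model, prompt_word, max_words=8, seed=0):
    """Extend a one-word prompt by repeatedly sampling a word seen after the last one."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    output = [prompt_word]
    # Stop at the length limit or when the last word was never followed by anything.
    while len(output) < max_words and output[-1] in model:
        output.append(rng.choice(model[output[-1]]))
    return " ".join(output)


# Toy corpus (an assumption for illustration; real models train on vastly more data).
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram_model(corpus)
print(generate(model, "the"))
```

Every word pair in the generated text appeared somewhere in the training text, but the sentence as a whole may be new. That is a small-scale version of creating content "based on" training data rather than copying it.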

04 Traditional AI vs. generative AI

Traditional AI tools are designed to help people organize, classify and make sense of data so they can make better decisions. They’re also used to perform predictive analytics that help organizations to determine the best path forward.

Generative AI specializes in creating new content based on the training data it has consumed and an end user’s directions, or prompt. Its outputs can provide tremendous value by jump-starting the creative process, broadening people’s sense of what’s possible, or simply creating a rough draft from which to work.

State-of-the-art generative AI models generally follow one of four modeling techniques:

- Diffusion models - iteratively add noise to data (forward diffusion), and then remove the noise (reverse diffusion). A neural network trained to perform the reverse diffusion process (noise removal) can generate high-quality images, text and other content types from pure noise or lower-quality content.

- Generative adversarial networks (GANs) - pit two neural networks against each other: a “generator” network uses deep learning to create synthetic data similar to existing data, and a “discriminator” network tries to distinguish the artificially generated data from real data. The networks are trained iteratively to out-compete each other (hence, “adversarial”) until the discriminator network’s classification accuracy converges to near 50%, i.e., no better than flipping a coin. Applications include synthesizing images, generating videos and multimedia content, and detecting anomalies.

- Transformer-based models - analyze relationships in sequential data in order to learn context and meaning across multiple time scales, and track the connection of particular words, strings of code, proteins, and other elements across pages, chapters, or even books. Most useful in analyzing and generating written content. GPT models are transformer-based.

- Variational autoencoders (VAEs) - use two neural networks: an encoder that learns to represent data more efficiently (the compressed features are referred to as the latent representation), and a decoder that recreates or generates data from that compressed latent representation. Useful for generating images, music and video; detecting anomalies; security analysis; and signal processing.
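As one illustration of the techniques above, the forward half of a diffusion model can be sketched in a few lines of Python. This is a simplified sketch under stated assumptions: it uses a constant noise level `beta` at every step (real systems usually vary it over time), it operates on a short list of numbers instead of an image, and the function names are hypothetical. The reverse process, which is the part a neural network has to learn, is not shown.

```python
import math
import random


def forward_diffusion(data, num_steps=200, beta=0.05, seed=0):
    """Repeatedly blend the data with Gaussian noise (the forward diffusion process)."""
    rng = random.Random(seed)
    x = list(data)
    for _ in range(num_steps):
        # Each step shrinks the current values slightly and adds a little fresh noise.
        x = [math.sqrt(1 - beta) * v + math.sqrt(beta) * rng.gauss(0, 1) for v in x]
    return x


def signal_fraction(num_steps, beta=0.05):
    """Fraction of the original signal that survives after num_steps of noising."""
    return math.sqrt(1 - beta) ** num_steps


# Toy "data" (an assumption for illustration): four values standing in for pixels.
clean = [1.0, -1.0, 0.5, 0.0]
noisy = forward_diffusion(clean)

print("signal left after 10 steps: ", round(signal_fraction(10), 3))
print("signal left after 200 steps:", round(signal_fraction(200), 3))
```

After enough steps almost none of the original signal remains, so the result is essentially pure noise. A generative diffusion model is trained to undo these steps one at a time, which is what lets it start from noise and arrive at new content.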

05 Generative AI tools on the market

06 What are the advantages of generative AI?

Chief among the advantages of generative AI is the ability to automate or support functions such as customer service, to create and customize marketing content more efficiently, and to streamline research and development. In each of these cases, organizations can reduce labor and focus their energy and resources on other areas of business.

A report from McKinsey suggests that generative AI could add as much as $4.4 trillion a year in value to the global economy by addressing common business challenges in 16 business functions, with the biggest impact being felt in banking, advanced technological development, and life science research.

Beyond that, generative AI could also help in areas such as augmenting datasets when real-life data is in short supply, revising content to make it more relevant, and analyzing data to detect anomalies, such as security threats or product deficiencies.

Generative AI's ability to augment human capability could also make it especially effective in helping pharmaceutical researchers develop new drugs, helping fashion designers envision the appearance of a new outfit, or in generating new training models in fields such as robotics and autonomous vehicles.

07 What are the practical business applications of generative AI?

For all of the potential of generative AI, many organizations are still assessing how to use the technology in their day-to-day business operations. Others are testing the waters by getting assistance with projects such as writing email, software code and blog posts; creating pictures, audio, and video; brainstorming; and analyzing data. Meanwhile, companies such as Adobe, Microsoft, and WordPress are integrating generative AI tools into their software products to help employees get more done.

McKinsey forecasts that 75 percent of the business value that generative AI delivers will fall into four areas:

- Customer operations

- Marketing & sales

- Software engineering

- Research & development

For example, in customer service operations, generative AI chatbots could help address customer issues that are the easiest to resolve, freeing up human agents to assist with more complex technical issues. Among the cost savings and performance improvements that McKinsey found in its study was a 25 percent reduction in agent attrition and in requests to speak with customer service managers.

In research & development, one use of generative AI is to create drug candidates, accelerating the design of new treatments. Generative AI is already being used by biotech firms to develop new medicines, and it has similar potential in areas such as designing electrical circuits and large-scale physical products. McKinsey believes that generative AI could improve productivity, resulting in a 10-15 percent reduction in R&D costs.

08 Model training for generative AI

Many organizations are developing generative AI applications to manage, document, and formalize the tribal knowledge trapped inside their companies (whether as policy manuals or employees’ first-hand knowledge). Ensuring that these tools can accurately search and catalog this information starts by training the deep learning algorithms that power them—just as the machine learning algorithms that power traditional AI need to be trained.

Text-based generative AI tools are powered by large language models, a type of deep learning neural network that is trained on a massive amount of text data. The massive size of the data set gives large language models the capacity to learn and make informed predictions or choices, much like people can. This is one of the biggest reasons why organizations are interested in training generative AI to meet their particular needs.

There are three primary approaches to training a large language model with proprietary data, and there are pros and cons with each:

- Create and train a domain-specific large language model (LLM) from the ground up: creates a model tailored to your needs, but is expensive and resource intensive

- Fine-tune a pre-existing LLM using domain-specific data and documents: requires less compute power than training from scratch because it only adapts an already-trained model’s parameters, but can still be expensive

- Prompt-tune an existing LLM using domain-specific prompts: requires less compute power and data than the other two methods and doesn’t change the model parameters in any way, but requires specialized data science (prompt engineering) expertise
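The practical difference between the last two approaches is which numbers change during training. The toy Python sketch below illustrates that difference under heavy assumptions: the "model" is just two weights, the prompt is a single learnable number (in the spirit of soft prompt tuning, not a hand-written text prompt), and every name in it is hypothetical. Training from the ground up would use the same loop as `fine_tune`, but would start from uninformed weights and, in practice, need far more data.

```python
def predict(weights, prompt, x):
    """Toy 'model': a weighted sum of a prompt value and the input."""
    return weights["prompt"] * prompt + weights["input"] * x


def mean_squared_error(weights, prompt, data):
    return sum((predict(weights, prompt, x) - y) ** 2 for x, y in data) / len(data)


def prompt_tune(weights, data, steps=500, lr=0.05):
    """Learn only the prompt value; the model weights are never modified."""
    prompt = 0.0
    for _ in range(steps):
        errors = [predict(weights, prompt, x) - y for x, y in data]
        grad = sum(2 * e * weights["prompt"] for e in errors) / len(data)
        prompt -= lr * grad
    return prompt


def fine_tune(weights, data, prompt=1.0, steps=500, lr=0.05):
    """Update a copy of the model weights on domain data; the prompt stays fixed."""
    tuned = dict(weights)
    for _ in range(steps):
        errors = [predict(tuned, prompt, x) - y for x, y in data]
        grad_prompt_weight = sum(2 * e * prompt for e in errors) / len(data)
        grad_input_weight = sum(2 * e * x for e, (x, _) in zip(errors, data)) / len(data)
        tuned["prompt"] -= lr * grad_prompt_weight
        tuned["input"] -= lr * grad_input_weight
    return tuned


# Hypothetical "pre-trained" weights and domain data (y = 2x + 3), for illustration only.
pretrained = {"prompt": 1.0, "input": 2.0}
domain_data = [(x, 2 * x + 3) for x in (0.0, 1.0, 2.0, 3.0)]

learned_prompt = prompt_tune(pretrained, domain_data)
tuned_weights = fine_tune(pretrained, domain_data)

print("prompt tuning -> prompt:", round(learned_prompt, 3), "weights:", pretrained)
print("fine-tuning   -> weights:", {k: round(v, 3) for k, v in tuned_weights.items()})
```

In this toy both approaches end up fitting the domain data, but prompt tuning leaves `pretrained` exactly as it was, while fine-tuning produces a new set of weights that has to be stored and maintained.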

09 The importance of human involvement in generative AI

Ensuring that your generative AI application is accurate and reliable depends on human involvement in multiple areas, including:

- Providing natural/native speech inputs

- Providing inputs from diverse groups of individuals

- Exercising judgment in unpredictable or new scenarios

- Providing nuanced inputs such as creative writing

- Fine-tuning models for domain specificity

- Evaluating the output of the solution for bias, accuracy or toxic language

With human involvement in both the input phase and the evaluation phase, generative AI solutions are more likely to be developed in a safe and ethical manner.
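One way to picture human involvement in the evaluation phase is a review queue that decides which generated outputs a person must check before release. The Python sketch below is only an illustration: the `ReviewQueue` class, the confidence score, the 0.8 threshold and the short list of blocked terms are all assumptions, and a real system would rely on far more capable checks for bias, accuracy and toxic language.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewQueue:
    """Routes generated text either to automatic approval or to a human reviewer."""
    threshold: float = 0.8                       # hypothetical minimum confidence
    blocked_terms: tuple = ("idiot", "stupid")   # hypothetical toxic-language list
    pending: list = field(default_factory=list)
    approved: list = field(default_factory=list)

    def submit(self, text, confidence):
        # Anything flagged or low-confidence waits for a person to evaluate it.
        flagged = any(term in text.lower() for term in self.blocked_terms)
        if flagged or confidence < self.threshold:
            self.pending.append(text)
            return "needs_human_review"
        self.approved.append(text)
        return "auto_approved"


queue = ReviewQueue()
results = [
    queue.submit("Your order ships tomorrow.", confidence=0.95),
    queue.submit("That is a stupid question.", confidence=0.99),
    queue.submit("The refund policy may allow this.", confidence=0.40),
]
print(results)
print("waiting for a human:", len(queue.pending))
```

The design choice worth noting is that the automated checks never reject content on their own; they only decide how much of the output a human evaluator needs to see.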

10 Mitigating risk in generative AI

Some risks are baked into generative AI, including intellectual property infringement, security and privacy breaches, and unconscious bias. The power of large language models stems from the sheer volume of information they’ve scooped up from the internet, which informs generative AI’s responses, and that same information can carry copyrighted material, personal data and bias into those responses.

A study by Boston Consulting Group and MIT Sloan Management Review found that more than 60 percent of AI practitioners are inadequately prepared to deal with such risk, in part because of the opaque way in which data is gathered, misplaced trust in generative AI’s abilities, and a false sense that its decisions and recommendations are free of any bias.

At the outset of the training process, you must determine the business risks to guard against. That will help determine how stringent you must be with training and quality control. For example, companies that operate in the medical industry need to adopt an extremely stringent process that ensures the health and welfare of patients, whereas a hospitality provider could adopt governance procedures that are more flexible.

Rather than deploying a generative AI foundation model (such as ChatGPT) right out of the box, you should ensure that you have robust governance measures in place, incorporate human-in-the-loop reinforcement learning, and reassess your responsible AI policies to account for advances such as generative AI.

There’s no denying that the power and adaptability of generative AI are unlike anything we’ve seen since the dawn of the digital computing age in the 1950s. But as with any such tool, a requisite amount of effort, adaptation, and training is needed before it becomes useful.

At LXT, we provide various services for generative AI, including fine-tuning and data evaluation.